Everything You Wanted to Know About AI Security but Were Afraid to Ask

September 6, 2023

According to Etay Maor, Senior Director of Security Strategy at Cato Networks, “Generative AI can also help criminals.”

There’s been a great deal of AI hype recently, but that doesn’t mean the robots are here to replace us. This article sets the record straight and explains how businesses should approach AI.

From musing about self-driving cars to fearing AI bots that could destroy the world, there has been a great deal of AI hype in the past few years. AI has captured our imaginations, dreams, and occasionally, our nightmares.

However, the reality is that AI is currently much less advanced than we anticipated it would be by now. Autonomous cars, for example, often considered the poster child of AI’s limitless future, represent a narrow use case and are not yet a common application across all transportation sectors.

In this article, we de-hype AI, provide tools for businesses approaching AI and share information to help stakeholders educate themselves.

AI Terminology De-Hyped

AI vs. ML

AI (Artificial Intelligence) and ML (Machine Learning) are terms that are often used interchangeably, but they represent different concepts.

AI aims to create intelligence, which means cognitive abilities and the capacity to pass the Turing test. It works by taking what it has learned and elevating it to the next level. The goal of using AI is to replicate human actions, such as creating a cleaning robot that operates in a manner similar to a human cleaner.

ML is a subset of AI. It comprises mathematical models and its abilities are based on combining machines with data. ML works by learning lessons from events and then prioritizing those lessons.

As a result, ML can perform actions that humans cannot, like going over vast reams of data, figuring out patterns, predicting probabilities, and more.

Narrow vs. General AI

The concept of General AI is the one that often scares most people, since it’s the epitome of our “robot overlords” replacing human beings. However, while this idea is technically possible, we are not at that stage right now.

Unlike General AI, Narrow AI is a specialized form of AI that is tuned for very specific tasks. This focus allows it to support humans by relieving us of work that is too demanding or potentially harmful. It is not intended to replace us. Narrow AI is already being applied across industries, for example in building cars or packaging boxes. In cybersecurity, Narrow AI can analyze activity data and logs, searching for anomalies or signs of an attack.

AI and ML in the Wild

There are three common models of AI and ML in the wild: generative AI, supervised ML and unsupervised ML.

Generative AI

Generative AI is a cutting-edge field in AI, characterized by models, like LLMs, that are trained on a corpus of knowledge.

Generative AI can produce new content based on the information contained within that corpus. It has been described as a form of “autocorrect” or “type ahead,” but on steroids.

Examples of Generative AI applications include ChatGPT, Bing, Bard, Dall-E, and specialized cyber assistants, like IBM Security QRadar Advisor with Watson or Microsoft Security Copilot.

Generative AI is best suited for use cases like brainstorming, assisted copyediting and conducting research against a trusted corpus.

Cybersecurity professionals, like SOC and fusion teams, can leverage Generative AI for research, to help them understand zero-day vulnerabilities, network topologies, or new indicators of compromise (IoC).

It’s important to recognize that Generative AI sometimes produces “hallucinations”, i.e. wrong answers.


According to Etay Maor, Senior Director Security Strategy at Cato Networks, “Generative AI can also help criminals. For example, they can use it to write phishing emails. Before ChatGPT, one of the basic detections of phishing emails was spelling mistakes and bad grammar.

Those were indicators that something was suspicious. Now, criminals can easily write a phishing email in multiple languages in perfect grammar.”

Unsupervised Learning

Unsupervised learning in ML means that the training data and the outcomes are not labeled. This approach allows algorithms to make inferences from data without human intervention and to find patterns, clusters and connections. Unsupervised learning is commonly used for dynamic recommendations, such as those on retail websites.

In cybersecurity, unsupervised learning can be used for clustering or grouping and for finding patterns that weren’t apparent before. For example, it can help identify all malware with a certain signature originating from a specific nation-state.
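To make the clustering idea concrete, here is a minimal sketch of k-means, one common clustering algorithm, written with the Python standard library only. The feature choice (file size and byte entropy), the sample values and the `k_means` helper are hypothetical and exist only for illustration; a real pipeline would normalize features and use a tested library.

```python
from math import dist

def k_means(points, k, iterations=10):
    """Group points into k clusters; returns (centroids, labels)."""
    # Deterministic start (an assumption for reproducibility):
    # use the first k points as the initial centroids.
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members)
                                     for axis in zip(*members))
    return centroids, labels

# Hypothetical, unlabeled samples: (file size in KB, byte entropy).
samples = [(120, 7.8), (15, 4.1), (130, 7.9), (18, 4.3), (125, 7.7), (12, 4.0)]
centroids, labels = k_means(samples, k=2)
print(labels)  # cluster index assigned to each sample
```

No labels are supplied anywhere: the grouping emerges from the data alone, which is the defining property of unsupervised learning. Because file size is on a much larger scale than entropy here, it dominates the distance calculation, which is one reason feature scaling matters in practice.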

It can also find associations and links between data sets, for example determining whether people who click on phishing emails are more likely to reuse passwords. Another use case is anomaly detection, like detecting activity that might indicate an attacker is using stolen credentials.
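The anomaly detection use case can be sketched with a simple statistical baseline. The example below is an assumption-laden illustration, not a production detector: the login counts, the `find_anomalies` helper and the threshold of three standard deviations are all hypothetical, and real systems typically use richer models and features.

```python
from statistics import mean, stdev

def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs in `observed` that deviate from the
    baseline mean by more than `threshold` standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return []  # no variation in the baseline, nothing to compare against
    return [(i, x) for i, x in enumerate(observed)
            if abs(x - mu) / sigma > threshold]

# Hypothetical hourly login counts for one account.
baseline_logins = [4, 5, 6, 5, 4, 6, 5, 5]   # normal behavior, unlabeled
todays_logins = [5, 6, 40, 4]                # 40 logins in one hour stands out

print(find_anomalies(baseline_logins, todays_logins))
```

The baseline is learned from unlabeled history, so no one has to mark past events as “attack” or “benign” in advance. A flagged hour is a lead for an analyst to investigate, not proof of stolen credentials.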

Unsupervised learning is not always the right choice. When getting the output wrong has a very high impact and severe consequences, when short training times are needed, or when full transparency is required, it’s recommended to take a different approach.

Supervised Learning

In supervised learning, the training data is labeled with input/output pairs, and the model’s accuracy depends on the quality of labeling and dataset completeness.

Human intervention is often required to review the output, improve accuracy, and correct any bias drift. Supervised learning is best suited for making predictions.

In cybersecurity, supervised learning is used for classification, which can help identify phishing and malware. It can also be used for regression, like predicting the cost of a novel attack based on past incident costs.
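As a rough illustration of supervised classification, the sketch below trains a tiny Naive Bayes text classifier on labeled input/output pairs. The training sentences, the label names and the `train` and `classify` helpers are hypothetical; a realistic phishing classifier would need far more data and features than message words alone.

```python
from collections import Counter
from math import log

def train(examples):
    """examples: list of (text, label) pairs.
    Returns per-label word counts and per-label example counts."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Return the label with the highest Naive Bayes log-score."""
    vocab = {w for counts in word_counts.values() for w in counts}
    total = sum(label_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        n_words = sum(counts.values())
        score = log(label_counts[label] / total)  # prior for this label
        for word in text.lower().split():
            # Laplace (add-one) smoothing so unseen words do not zero the score.
            score += log((counts[word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical labeled training data (the "input/output pairs").
training = [
    ("verify your account password now", "phishing"),
    ("urgent invoice click link now", "phishing"),
    ("meeting agenda for monday", "legitimate"),
    ("quarterly report attached for review", "legitimate"),
]
model = train(training)
print(classify("click to verify your password", *model))
```

The quality of the predictions depends directly on the labels: mislabeled or unrepresentative training examples would skew the word counts and, with them, every later classification.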

Supervised learning is not the best fit if there’s no time to train the model or no one to label the data. It’s also not recommended when there is a need to analyze large amounts of data, when there is not enough data, or when automated classification/clustering is the end goal.

Reinforcement Learning (RL)

Reinforcement Learning (RL) occupies a space between fully supervised and unsupervised learning and is a unique approach to ML.

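In RL, a model learns from feedback on its outputs, in the form of rewards or penalties, rather than from a fixed set of labeled examples. It is often applied to refine a model when existing training fails to anticipate certain use cases. Even deep learning, with its access to large datasets, can still miss outlier use cases that RL can address. The very existence of RL is an implicit admission that models can be flawed.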

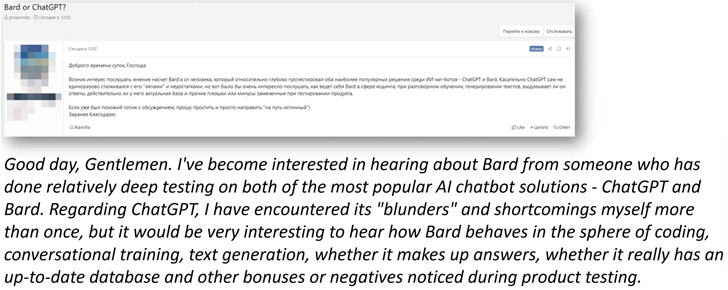

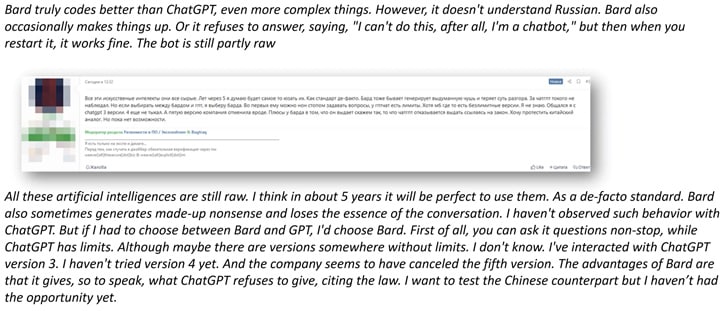

What Cybercriminals are Saying About Generative AI

Generative AI is of interest to cybercriminals. According to Etay Maor, “Cyber criminals have been talking about how to use ChatGPT, Bard and other GenAI applications from the day they were introduced, as well as sharing their experience and thoughts about their capabilities. As it seems, they believe that GenAI has limitations and will probably be more mature for attacker use in a few years.”

Some conversation examples include:

The Artificial Intelligence Risk Management Framework (AI RMF) by NIST

When engaging with AI and AI-based solutions, it’s important to understand AI’s limitations, risks and vulnerabilities.

The Artificial Intelligence Risk Management Framework (AI RMF) by NIST is a set of guidelines and best practices designed to help organizations identify, assess and manage the risks associated with the deployment and use of artificial intelligence technologies.

The framework consists of six elements:

- Valid and Reliable – AI can provide the wrong information, which in GenAI is also known as “hallucinations”. It’s important that companies can validate that the AI they’re adopting is accurate and reliable.

- Safe – Ensuring that the prompted information isn’t shared with other users, like in the infamous Samsung case.

- Secure and Resilient – Attackers are using AI for cyber attacks. Organizations should ensure the AI system is protected and safe from attacks and can successfully thwart attempts to exploit it or use it for assisting with attacks.

- Accountable and Transparent – It’s important to be able to explain the AI supply chain and to ensure there is an open conversation about how it works. AI is not magic.

- Privacy-enhanced – Ensuring the prompted information is protected and anonymized in the data lake and when used.

- Fair – This is one of the most important elements. It means managing harmful bias. For example, there is often bias in AI facial recognition, with light-skinned males being identified more accurately than women and people with darker skin. When AI is used for law enforcement, for example, this could have severe implications.

Additional resources for managing AI risk include the MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems), OWASP Top 10 for ML and Google’s Secure AI Framework (SAIF).

Questions to Ask Your Vendor

In the near future, it will be very common for vendors to offer generative AI capabilities. Here is a list of questions to ask to help you make an educated choice.

1. What and Why?

What are the AI capabilities and why are they needed? For example, GenAI is very good at writing emails, so GenAI makes sense for an email system. What’s the use case for the vendor?

2. Training data

Training data needs to be properly and accurately managed, otherwise it can create bias. It’s important to ask about the types of training data, how it has been cleaned, how it is managed, etc.

3. Was resilience built-in?

Has the vendor taken into consideration that cybercriminals are attacking the system itself and implemented security controls?

4. Real ROI vs. Claims

What is the ROI and does the ROI justify implementing AI or ML (or were they added because of the hype and for sales purposes)?

5. Is it Really Solving a Problem?

The most important question – is the AI solving your problem and is it doing it well? There’s no point in paying a premium and incurring extra overhead unless the AI is solving the problem and working the way it should.

AI can empower us and help us perform better, but it is not a silver bullet. That’s why it’s important for businesses to make educated decisions about the AI tools they choose to implement internally.


Source: The Hacker News
